Videoflow (default)

C++ (Windows, Linux, MacOS / CUDA and Metal accelerated) port of VideoFlow. This is a bidirectional optical flow model making better use of temporal cues.

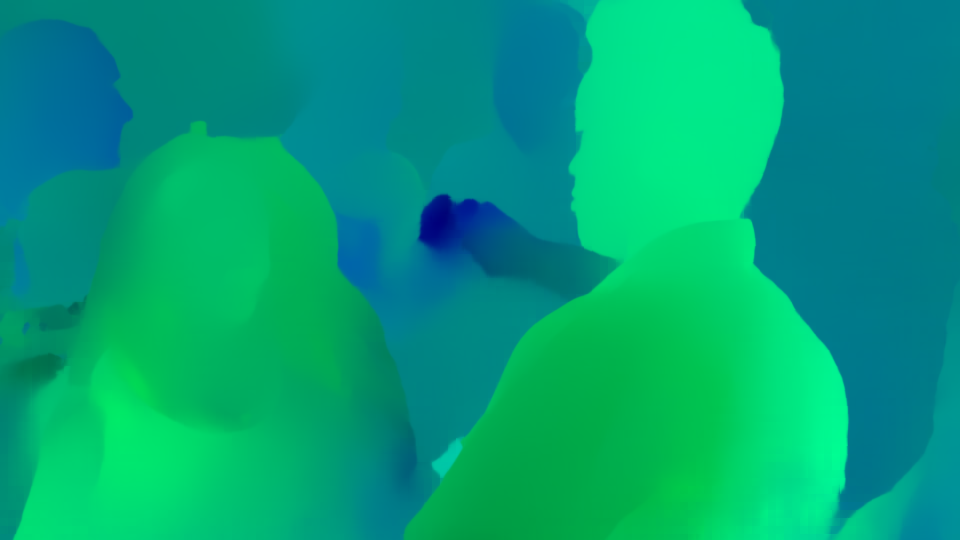

Example Input & Outputs

| Inputs | Outputs |

|

|

Demo Code

1#include "blace_ai.h"

2#include "videoflow_v1_default_v1_ALL_export_version_v25.h"

3#include <opencv2/opencv.hpp>

4

5using namespace blace;

6

7int main() {

8 ::workload_management::BlaceWorld blace;

9 auto exe_path = util::getPathToExe();

10 auto frame_0_op = CONSTRUCT_OP(

11 ops::FromImageFileOp((exe_path / "videoflow_frame_0.png").string()));

12 auto frame_1_op = CONSTRUCT_OP(

13 ops::FromImageFileOp((exe_path / "videoflow_frame_1.png").string()));

14 auto frame_2_op = CONSTRUCT_OP(

15 ops::FromImageFileOp((exe_path / "videoflow_frame_2.png").string()));

16

17 // interpolate to half size to save memory

18 frame_0_op = CONSTRUCT_OP(ops::Interpolate2DOp(

19 frame_0_op, 540, 960, ml_core::BILINEAR, false, true));

20 frame_1_op = CONSTRUCT_OP(ops::Interpolate2DOp(

21 frame_1_op, 540, 960, ml_core::BILINEAR, false, true));

22 frame_2_op = CONSTRUCT_OP(ops::Interpolate2DOp(

23 frame_2_op, 540, 960, ml_core::BILINEAR, false, true));

24

25 // construct model inference arguments

26 ml_core::InferenceArgsCollection infer_args;

27 infer_args.inference_args.backends = {

28 ml_core::TORCHSCRIPT_CUDA_FP16, ml_core::TORCHSCRIPT_MPS_FP16,

29 ml_core::TORCHSCRIPT_CUDA_FP32, ml_core::TORCHSCRIPT_MPS_FP32,

30 ml_core::ONNX_DML_FP32, ml_core::TORCHSCRIPT_CPU_FP32};

31

32 // construct inference operation. the model returns two values, flow 1->0 and

33 // flow 1->2

34 auto flow_1_to_0 = videoflow_v1_default_v1_ALL_export_version_v25_run(

35 frame_0_op, frame_1_op, frame_2_op, 0, infer_args,

36 util::getPathToExe().string());

37 auto flow_1_to_2 = videoflow_v1_default_v1_ALL_export_version_v25_run(

38 frame_0_op, frame_1_op, frame_2_op, 1, infer_args,

39 util::getPathToExe().string());

40

41 // normalize optical flow to zero-one range for plotting. The model returns

42 // relative offsets in -1 to 1 pixel space, so the raw values are to small to

43 // plot

44 flow_1_to_0 = CONSTRUCT_OP(ops::NormalizeToZeroOneOP(flow_1_to_0));

45 flow_1_to_0 = CONSTRUCT_OP(ops::ToColorOp(flow_1_to_0, ml_core::RGB));

46

47 flow_1_to_2 = CONSTRUCT_OP(ops::NormalizeToZeroOneOP(flow_1_to_2));

48 flow_1_to_2 = CONSTRUCT_OP(ops::ToColorOp(flow_1_to_2, ml_core::RGB));

49

50 // construct evaluator and evaluate to opencv mat

51 computation_graph::GraphEvaluator evaluator_0(flow_1_to_0);

52 auto [return_code_0, flow_1_to_0_cv] = evaluator_0.evaluateToCVMat();

53 computation_graph::GraphEvaluator evaluator_1(flow_1_to_2);

54 auto [return_code_1, flow_1_to_2_cv] = evaluator_1.evaluateToCVMat();

55

56 // multipy for for plotting

57 flow_1_to_0_cv *= 255;

58 flow_1_to_2_cv *= 255;

59

60 cv::imwrite((exe_path / "optical_flow_1_to_0.png").string(), flow_1_to_0_cv);

61 cv::imwrite((exe_path / "optical_flow_1_to_2.png").string(), flow_1_to_2_cv);

62

63 return 0;

64}

Tested on version v0.9.96 of blace.ai sdk. Might also work on newer or older releases (check if release notes of blace.ai state breaking changes).

Quickstart

- Download blace.ai SDK and unzip. In the bootstrap script

build_run_demos.ps1(Windows) orbuild_run_demos.sh(Linux/MacOS) set theBLACE_AI_CMAKE_DIRenvironment variable to thecmakefolder inside the unzipped SDK, e.g.export BLACE_AI_CMAKE_DIR="<unzip_folder>/package/cmake". - Download the model payload(s) (

.binfiles) from below and place in the same folder as the bootstrapper scripts. - Then run the bootstrap script with

powershell build_run_demo.ps1(Windows)

sh build_run_demo.sh(Linux and MacOS).

This will build and execute the demo.

Supported Backends

| Torchscript CPU | Torchscript CUDA FP16 * | Torchscript CUDA FP32 * | Torchscript MPS FP16 * | Torchscript MPS FP32 * | ONNX CPU FP32 | ONNX DirectML FP32 * |

|---|---|---|---|---|---|---|

| ✅ | ✅ | ✅ | ✅ | ✅ | ❌ | ❌ |

(*: Hardware Accelerated)

Artifacts

| Torchscript Payload | Demo Project | Header |